Breakthrough Technology Converts Brain Signals to Speech in Near Real-Time

- Mary

- Apr 5, 2025

UC Berkeley and UCSF Researchers Achieve Major Advancement in Neural Communication

Researchers at UC Berkeley and UC San Francisco have developed a groundbreaking technology that transforms brain signals into speech with unprecedented speed, potentially revolutionizing communication for people with severe paralysis who cannot speak.

The study, published this week in Nature Neuroscience, describes a neural interface that converts brain signals into speech nearly eight times faster than previous systems, bringing it close to real-time communication.

How the Technology Works

The neuroprosthesis uses technology similar to the voice recognition systems in consumer devices such as Alexa and Siri, with one crucial difference: it captures neural signals directly from the brain.

"We're intercepting the signal midway through the process of converting thought into articulation," explains lead author Cheol-Jun Cho, a UC Berkeley PhD student. "That is, after you've decided what to say and what words to use, you decode the signal."

Technical Process: From Brain Signals to Speech

The system works by:

- Capturing brain signals in 80-millisecond increments immediately after a person forms the intention to speak
- Processing these electrocorticography (ECoG) signals through a neural encoder
- Converting the encoded data into sound using a deep learning recurrent neural network model
- Using pre-injury voice recordings to make the synthesized output sound more natural
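To make the step list above more concrete, here is a minimal Python sketch of the streaming idea: a multichannel signal is cut into consecutive 80 ms windows, each window is encoded, and a recurrent decoder updates its state and emits an output frame after every window. Only the 80 ms window length comes from the article. The sampling rate, the mean-pooling "encoder", and the tanh "decoder" are hypothetical placeholders, not the researchers' models or code.

```python
import math
from typing import Iterator

WINDOW_MS = 80        # window length reported in the article
SAMPLE_RATE_HZ = 200  # hypothetical feature rate, not taken from the study

Frame = list[float]   # one time step: one value per electrode channel


def stream_windows(signal: list[Frame], window_ms: int = WINDOW_MS,
                   rate_hz: int = SAMPLE_RATE_HZ) -> Iterator[list[Frame]]:
    """Yield consecutive, non-overlapping windows; a trailing partial window is held back."""
    step = rate_hz * window_ms // 1000  # samples per window (16 with the defaults)
    for start in range(0, len(signal) - step + 1, step):
        yield signal[start:start + step]


def encode(window: list[Frame]) -> list[float]:
    """Hypothetical encoder stand-in: the mean of each channel over the window."""
    n = len(window)
    return [sum(frame[c] for frame in window) / n for c in range(len(window[0]))]


def decode_step(features: list[float], hidden: list[float]) -> tuple[list[float], list[float]]:
    """Hypothetical recurrent decoder stand-in: the hidden state carries context between windows."""
    hidden = [math.tanh(0.5 * h + f) for h, f in zip(hidden, features)]
    return list(hidden), hidden  # (placeholder output frame, updated state)


def run_streaming(signal: list[Frame]) -> list[list[float]]:
    """Produce one output frame per 80 ms window instead of waiting for the full utterance."""
    hidden = [0.0] * len(signal[0])
    outputs = []
    for window in stream_windows(signal):
        frame, hidden = decode_step(encode(window), hidden)
        outputs.append(frame)  # available as soon as this window has been processed
    return outputs
```

With these assumed defaults, one second of 4-channel input (200 time steps) yields 12 full windows and therefore 12 output frames; the leftover 8 samples wait for the next window. The design point is that output starts after the first window rather than after the whole sentence.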

Processing the signal in these short increments significantly reduces delay, bringing the technology closer to practical use. Previous systems required approximately eight seconds to produce a single sentence; the new technology performs the same task in roughly one second.
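A toy timing model helps show why working in short increments cuts the wait. It assumes, hypothetically, that a sentence-level decoder must wait for the whole utterance before decoding, while a streaming decoder can emit output once its first 80 ms window is processed. The function names and any durations passed to them are illustrative assumptions; only the 80 ms window and the roughly 8 s versus 1 s figures come from the article.

```python
WINDOW_S = 0.080  # window length reported in the article


def batch_first_output_s(utterance_s: float, decode_s: float) -> float:
    """Toy model (hypothetical): wait for the whole utterance, then decode it in one pass."""
    return utterance_s + decode_s


def streaming_first_output_s(per_window_decode_s: float, window_s: float = WINDOW_S) -> float:
    """Toy model (hypothetical): output can begin once the first window is decoded."""
    return window_s + per_window_decode_s


# The article's own figures: about 8 s per sentence before, about 1 s now.
reported_speedup = 8.0 / 1.0
```

In this model the batch delay grows with sentence length, while the streaming delay depends only on the window size and per-window compute, which is consistent with the "nearly eight times faster" figure being driven by incremental processing.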

Current Limitations and Future Directions

At present, the device relies on electrodes placed directly on the surface of the brain, which limits its immediate applications. However, the research team is exploring ways to adapt the approach to other interfaces, including surgically implanted microelectrode arrays (MEAs) and non-invasive surface electromyography (sEMG).

The project builds on earlier research that Facebook sponsored before the company discontinued its involvement.

Edward Chang, chairman of UCSF's Department of Neurosurgery, serves as senior co-principal investigator on the current study.

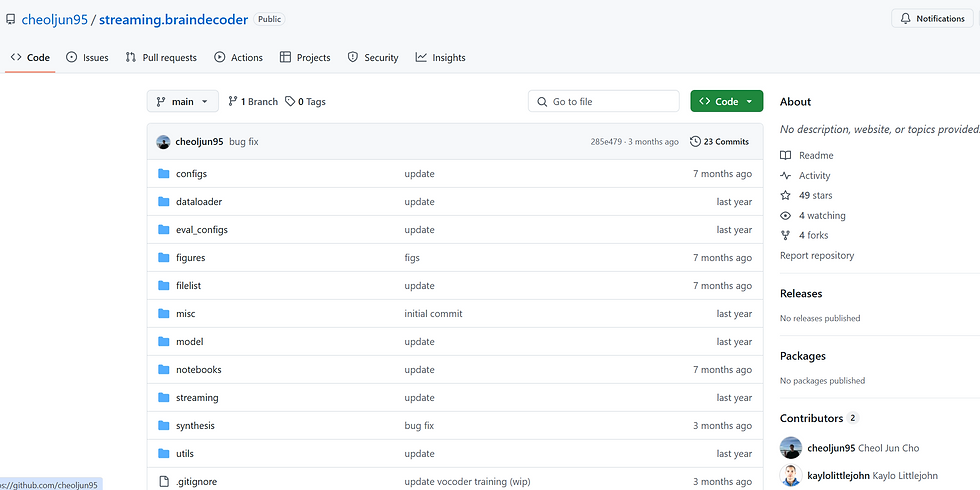

In a move that could accelerate further advancements in the field, the research team has made their code publicly available on GitHub, allowing other scientists to build upon their work.

This breakthrough represents a significant step forward in assistive communication technology, potentially offering new hope for patients with conditions like amyotrophic lateral sclerosis (ALS), stroke, or other forms of paralysis that affect speech.
